Before tomorrow’s vision of autonomous flight takes off, its groundwork needs to be set. With EAGLE, autonomy’s basic building block – sight – is being developed by experts at Airbus Helicopters.

A lone pilot, an injured hiker.

Faced with an unexpected humanitarian flight, the pilot engages his helicopter’s image-processing system; it flies them to a hospital while the pilot divides his time between the radio, his patient, and the final approach.

This is the future as seen by Airbus’ experts in autonomous solutions. In developing groundbreaking technology for self-piloting aircraft, they envision safer and more efficient means of transport, and the benefits that go with them.

Before a car can drive or a helicopter fly by itself, it needs images of the environment around it. That means onboard technology for image collection and analysis, linked to its central processing system and autopilot modes. At its helicopters division, Airbus is developing EAGLE as the “eyes” of autonomous aircraft. While it may not yet be applied to fully autonomous vehicles, EAGLE (Eye for Autonomous Guidance and Landing Extension) is an important step towards making them available.

What is EAGLE?

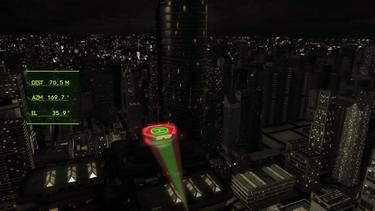

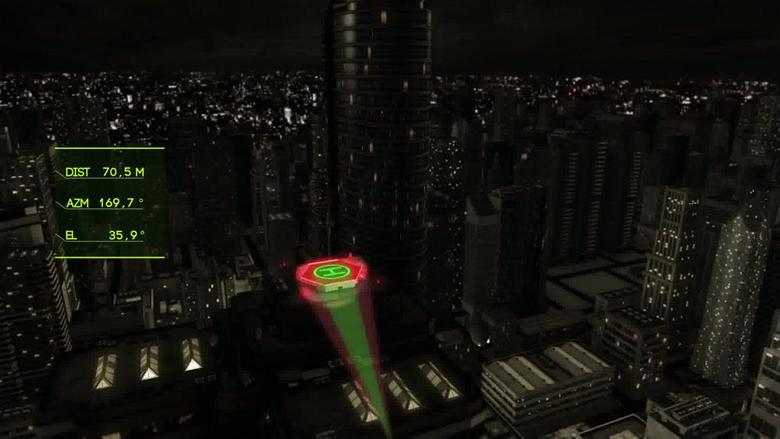

EAGLE is a real-time video processing system for aircraft. In flight, it collects data from various sources, such as cameras, and analyses it by means of a computer algorithm that has been “trained” to use the imagery in conjunction with the autopilot.

“It’s a complete loop,” explains Nicolas Damiani, Senior Expert in Systems Simulation at Airbus Helicopters. “EAGLE provides information to the autopilot. The autopilot displays how it intends to manage the trajectory to the target point. And the pilot monitors the parameters to make sure they are coherent with the image that is being acquired by EAGLE.” The ultimate goal? Fully automating the approach, thereby reducing pilot workload during this critical phase.

What is EAGLE, in tangible terms?

EAGLE consists of cameras and a computer. The present prototype, for example, uses three cameras inside a gyrostabilised gimbal pod. The cameras are extremely high definition; each produces images of around 14 million pixels.

Why three? Damiani’s team set EAGLE an ambitious test case: detect a helipad 2,000 metres away on a shallow four-degree approach slope. The resolution needed to zero in on such a target explains the combination of cameras. As the aircraft (in the test, an H225) starts its approach, the camera with a narrow field of view sends its input to the system. At medium range, the system switches to the camera with a larger field of view, then switches again during the landing to one with a fish-eye lens.
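To illustrate why range drives the choice of camera, here is a minimal sketch that estimates how many pixels a helipad spans in the image and picks the widest camera that still resolves it. It is not Airbus’ switching logic. The fields of view, sensor width, helipad size and pixel threshold are all hypothetical values chosen for illustration, and the pinhole model used here is only a rough stand-in for a real fish-eye lens.

```python
import math

# All values below are hypothetical, chosen only to illustrate the idea.
SENSOR_WIDTH_PX = 4000      # assumed horizontal resolution of each camera
HELIPAD_SIZE_M = 20.0       # assumed helipad width
MIN_PIXELS_ON_TARGET = 100  # assumed minimum width needed for detection

# Cameras listed from widest to narrowest field of view (degrees, assumed).
CAMERAS = [("wide", 100.0), ("medium", 40.0), ("narrow", 10.0)]


def pixels_on_target(target_size_m, distance_m, fov_deg, sensor_width_px):
    """Approximate horizontal pixels spanned by a target (pinhole model)."""
    # Focal length expressed in pixels for the given field of view.
    focal_px = (sensor_width_px / 2) / math.tan(math.radians(fov_deg) / 2)
    # Projected size of a target facing the camera at the given distance.
    return focal_px * target_size_m / distance_m


def select_camera(distance_m):
    """Pick the widest camera that still puts enough pixels on the helipad."""
    for name, fov_deg in CAMERAS:
        width_px = pixels_on_target(
            HELIPAD_SIZE_M, distance_m, fov_deg, SENSOR_WIDTH_PX
        )
        if width_px >= MIN_PIXELS_ON_TARGET:
            return name
    # Nothing resolves the target well enough: fall back to the narrowest view.
    return CAMERAS[-1][0]


for distance in (2000, 500, 100):
    print(distance, "m ->", select_camera(distance))
```

With these assumed numbers, the narrow camera is the only one that resolves the helipad at 2,000 metres, while the wider cameras take over as the aircraft closes in.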

The optronics are purely a prototype at this stage. “EAGLE is compatible with different camera options,” says Damiani. “Because we need to analyse 14 million pixels at around 30 Hz, its interface is made for high-resolution video streams. But EAGLE is also capable of using input from standard cameras.”
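The figures Damiani quotes give a sense of the processing load. The short calculation below is a sketch only: the pixel count and frame rate come from the article, while the bytes-per-pixel values are assumptions, since the article does not state the pixel format.

```python
# Figures quoted in the article.
PIXELS_PER_FRAME = 14_000_000
FRAME_RATE_HZ = 30


def pixel_rate(pixels_per_frame, frame_rate_hz):
    """Pixels that must be analysed every second."""
    return pixels_per_frame * frame_rate_hz


def raw_data_rate_mb_s(pixels_per_frame, frame_rate_hz, bytes_per_pixel):
    """Uncompressed data rate in megabytes per second (1 MB = 1e6 bytes)."""
    return pixel_rate(pixels_per_frame, frame_rate_hz) * bytes_per_pixel / 1e6


def frame_budget_ms(frame_rate_hz):
    """Time available to process one frame before the next one arrives."""
    return 1000.0 / frame_rate_hz


print(pixel_rate(PIXELS_PER_FRAME, FRAME_RATE_HZ))  # 420000000 pixels per second

# bytes_per_pixel is an assumption: 1 for 8-bit greyscale, 3 for 8-bit RGB.
for bytes_per_pixel in (1, 3):
    print(bytes_per_pixel, raw_data_rate_mb_s(PIXELS_PER_FRAME, FRAME_RATE_HZ, bytes_per_pixel))

print(round(frame_budget_ms(FRAME_RATE_HZ), 1))  # about 33.3 ms per frame
```

At 420 million pixels per second and roughly 33 milliseconds per frame, it is easier to see why the interface is built for high-resolution streams and why the processing is spread across many cores.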

This may mean using a simpler optical part based on cameras that are installed on today’s helicopters, to automate approaches when it isn’t necessary to see the target in such detail.

Three cameras, so what?

EAGLE also comprises a many-core processor that is able to run advanced algorithms. These are the project’s main focus, because before EAGLE can be industrialised, the algorithms and the processing unit, made up of 768 shading units dedicated to graphics as well as 12 processors, must first be certified.

“As soon as an algorithm is based on image processing, it is difficult to get it certified because it relies on technologies that are currently hard to confirm,” says Damiani. “The paradox is that the algorithms, especially those that are working on machine learning, are based on training the algorithm to learn by itself. In the end, even if the performance is good, it’s very difficult to predict its result because of operational conditions that differ from the image sets used for the training.” In other words, the algorithm may demonstrate a high level of problem-solving, but in the end nobody really knows what computations it has been running.

Research and development

To function in practice, EAGLE applies mathematical algorithms to detect the expected (helipads, for example) and others based on artificial intelligence for the unexpected (“intruders” like birds, private drones, etc.). Spotting a helipad uses algorithms that are not based on machine learning, because a helipad’s standard shape is one the algorithm can count on. In the case of seeing and tracking intruders, developers are investigating deep learning algorithms. “The problem is that before the algorithm can become intelligent, you have to train it,” says Damiani. “You have to get data on different approaches, in different environmental conditions.” In other words, a huge amount of data that is expensive to acquire and annotate, so as to “explain” the data to the convolutional neural networks (artificial neural networks used in machine learning, usually to analyse visual imagery).
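To make the idea of a non-learning detector concrete, here is a toy sketch of template matching, a classical technique that searches an image for a known shape. It is an illustration only, not the algorithm used in EAGLE: the 5x5 “H” pattern and the synthetic scene are hypothetical, and a real detector would also have to cope with perspective, scale, lighting and noise.

```python
# Hypothetical 5x5 binary template of an "H" marking (1 = painted, 0 = ground).
H_TEMPLATE = [
    [1, 0, 0, 0, 1],
    [1, 0, 0, 0, 1],
    [1, 1, 1, 1, 1],
    [1, 0, 0, 0, 1],
    [1, 0, 0, 0, 1],
]


def make_scene(height, width, top, left):
    """Build an empty synthetic image and paint the template at (top, left)."""
    scene = [[0] * width for _ in range(height)]
    for i, row in enumerate(H_TEMPLATE):
        for j, value in enumerate(row):
            scene[top + i][left + j] = value
    return scene


def match_template(image, template):
    """Slide the template over the image and return the best (row, col), score.

    The score is the sum of absolute differences: 0 means a perfect match.
    """
    image_h, image_w = len(image), len(image[0])
    tmpl_h, tmpl_w = len(template), len(template[0])
    best_position, best_score = None, float("inf")
    for r in range(image_h - tmpl_h + 1):
        for c in range(image_w - tmpl_w + 1):
            score = sum(
                abs(image[r + i][c + j] - template[i][j])
                for i in range(tmpl_h)
                for j in range(tmpl_w)
            )
            if score < best_score:
                best_position, best_score = (r, c), score
    return best_position, best_score


scene = make_scene(height=12, width=16, top=4, left=7)
position, score = match_template(scene, H_TEMPLATE)
print(position, score)  # (4, 7) 0
```

Nothing here is learned from data: the expected shape is written down in advance, which is what makes this family of methods easier to reason about than a trained network.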

To facilitate training the algorithm, Damiani’s team is developing an approach where 90% of the training could be done through simulation. Real-world data might be mandatory to complete the learning process, but simulators may be a cost-efficient complement.
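One simple way to picture this split is as the composition of a training set. The sketch below assumes that “90% of the training” means 90% of the training samples, which the article does not specify; the function name, sample labels and dataset sizes are hypothetical.

```python
import random


def build_training_set(simulated, real, total, sim_fraction=0.9, seed=0):
    """Draw a shuffled training set with a fixed share of simulated samples.

    sim_fraction=0.9 mirrors the 90% figure in the article, read here as a
    share of samples (an assumption).
    """
    rng = random.Random(seed)
    n_sim = round(total * sim_fraction)
    n_real = total - n_sim
    if n_sim > len(simulated) or n_real > len(real):
        raise ValueError("not enough samples to build the requested mix")
    samples = rng.sample(simulated, n_sim) + rng.sample(real, n_real)
    rng.shuffle(samples)
    return samples


# Hypothetical data: each sample is a (source, index) pair standing in for
# an annotated image.
simulated_images = [("sim", i) for i in range(1000)]
real_images = [("real", i) for i in range(200)]

training_set = build_training_set(simulated_images, real_images, total=500)
n_simulated = sum(1 for source, _ in training_set if source == "sim")
print(n_simulated, len(training_set) - n_simulated)  # 450 50
```

The expensive part, acquiring and annotating real flight imagery, is then limited to the remaining tenth of the set.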

The project’s next steps are to certify the product to make it available operationally, and to research additional algorithms and optronics, with the aim of developing a small, low-cost and accurate “eye” paired with a powerful “brain.”

By using computer technology in the service of autonomous flight, Airbus seeks to offer its customers more and better solutions. Systems such as EAGLE are at the heart of Airbus’ aim to provide global vertical flight solutions. By optimising flight trajectories and reducing pilot workload, this technology lets customers focus on their missions and, sometimes, make decisions more easily in unexpected situations.